06 Oct 2011

It is now time to present the results obtained during the first year of research and development on the Flux of Meme project, and I was glad to fly to Milan for the presentation at Telecom Italia last Friday, the 30th. Thanks-a-mil to Laurent-Walter Goix and Carlo Alberto Licciardi at Telecom for their constant support, reviews and recommendations, which helped immensely in achieving this result. And thanks-two-mils to Giuseppe Serra and Marco Bertini (also with the help of Federico Frappi) at the Media Integration and Communication Center for their help in defining and fine-tuning the algorithms. Looking forward to starting Flux phase 2!

This is a quick keynote that highlights the main elements of this geo-clustering and topic extraction tool, which uses Twitter as its main data source but is meant to expand to heterogeneous, context-based data sources.

[slideshare id=9492782&doc=fom110930-110930171037-phpapp02]


11 Sep 2011

A year has passed since the beginning of the trial of Flux of MEME, the project I presented during the Working Capital tour, and it is now time to analyze what has been learned and show what has been developed, to conclude this R&D phase and deliver results to Telecom Italia.

The initial idea

A quick description of the context is worthwhile. Twitter is a company formed in 2006 which has received several rounds of venture capital funding over the past few years, leading to today's valuation of $1.2B. Still, during the summer of 2009 the service was not yet as mature and widespread as it may look now. At that time the development of the Twitter API had just started, and it was probably one of the few sources, if not the only one, of geo-referenced data. The whole concept of communication in the form of public gossip, mediated by a channel that accepts 140 characters per message, was appearing in the world of social networks for the first time.

This led to the basic idea of crunching this data stream, which most importantly includes the geographical source of each message, and then summarizing the content, so as to analyze the space-time evolution of the concepts described and, ultimately, predict how they could migrate in space and time.

A practical use

It could help you control and curb the development of potentially risky situations (social network analysis proved useful during the recent riots in London, for example) or even define marketing strategies targeted to the local context.

The implementation

A substantial initial phase of research gave an overview of several aspects: capturing information from Twitter, the structure of the captured data, obtaining geo-located information, classifying the language of the tweets, enriching content through the discovery of related information, the possible functions for spatial clustering, the algorithms for topic extraction, the definition of views useful to an operator and, finally, performing trend analysis on the extracted information. All of this has resulted in a substantial amount of code, whose outcome is a demonstrator of the validity of the initial theory.
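
To make the idea of following a concept through space and time more concrete, here is a minimal sketch that counts how often a keyword appears per day and per grid cell. It is not the project's algorithm: simple grid binning stands in for the spatial clustering step, and the input format (`lat,lon,date,keyword`, one tweet per line) and the function name `grid_counts` are assumptions made for the illustration.

```shell
#!/bin/sh
# Sketch only: grid binning as a stand-in for spatial clustering.
# Assumed (hypothetical) input on stdin: "lat,lon,YYYY-MM-DD,keyword" per line.
# Output: "date,cell_lat,cell_lon,count", sorted by date and cell.
grid_counts() {
    cell="$1"      # grid cell size in degrees, e.g. 1
    keyword="$2"   # concept to follow, e.g. earthquake
    awk -F',' -v cell="$cell" -v kw="$keyword" '
        # floor that also behaves correctly for negative coordinates
        function fl(x,   i) { i = int(x); return (x < i) ? i - 1 : i }
        $4 == kw {
            la = fl($1 / cell) * cell   # south edge of the cell
            lo = fl($2 / cell) * cell   # west edge of the cell
            n[$3 "," la "," lo]++
        }
        END { for (k in n) print k "," n[k] }
    ' | sort
}
```

Feeding it a file of tweets, for instance `grid_counts 1 earthquake < tweets.csv` (where `tweets.csv` is a hypothetical export), gives one line per day and cell, which is the kind of table a map view over time could be drawn from.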

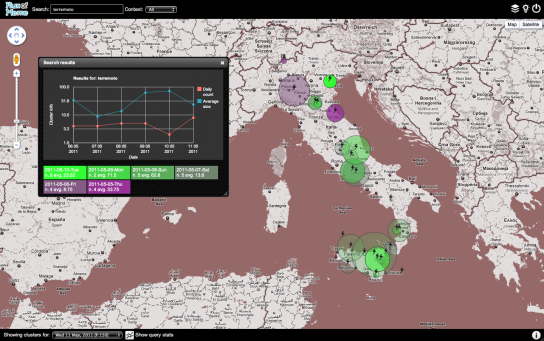

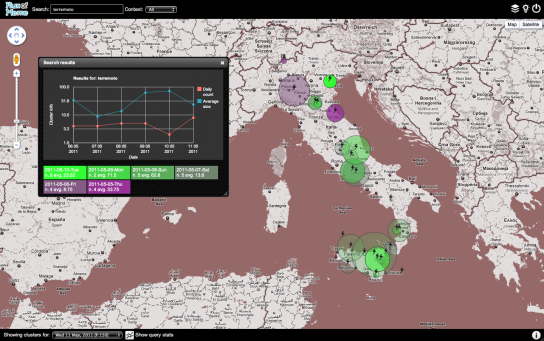

Space-time evolution of the concept "earthquake" in a limited subset of data captured during May 2011



31 Jul 2011

A few days ago I started the server setup for a web project at @therumpusroom_ and, after receiving the traffic estimates, I thought a single Apache server would not be enough to handle the expected load of visitors. For several reasons I want to avoid using a load balancer and multiple Apache instances, hence the decision to use Nginx, with MySQL running on a separate dedicated server.

The whole infrastructure lives on Amazon Web Services and the web application - still under development - will rely on CodeIgniter. I have read quite a lot of articles online and stolen bits and pieces of configuration files, but none of them entirely reflected what I needed. I feel this is quite a common configuration, so I am writing down the required steps and some code snippets here, both for my personal records and in the hope that they help someone else with similar issues.

The premise: implement a CodeIgniter installation on Amazon EC2 with a dedicated DB server and content delivery network for rich media distribution.

Pre-requisites / specs: Ubuntu 11.10 Oneiric Ocelot 64bit with the Nginx web server running on a large instance on Amazon EC2, a dedicated MySQL server on Amazon RDS and the CloudFront CDN.

The steps:

1. choose your Ubuntu installation

I ended up choosing Oneiric Ocelot 64bit, as I am always too tempted to try the latest. In any case, you can always find your own Ubuntu AMI using the super helpful AMI locator for EC2.

2. start a basic Nginx installation

I used this guide on Linode to configure Nginx and PHP-FastCGI on Ubuntu 11.04 (Natty) as a starting point. Just be aware of the following:

- ignore the hostname configuration: it did not work for me and it is not needed for the web server to work properly

- start with the suggested config for nginx, but keep in mind you will need to finalize it later

Also, the init.d/php-fastcgi script in the Linode guide gave errors and did not work properly for me, so I created a simpler version (you may need to create the pid/socket folders manually before running the script for the first time):

PHP_SCRIPT=/usr/bin/php-fastcgi

PID_DIR=/var/run/php-fastcgi

PID_FILE=/var/run/php-fastcgi/php-fastcgi.pid

SOCKET_FILE=/var/run/php-fastcgi/php-fastcgi.socket

RET_VAL=0
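
The pid/socket folder mentioned above can be created with a small helper along these lines. This is a minimal sketch, not part of the original script: the function name `prepare_run_dir` and the `www-data` owner are assumptions, and only the directory path is taken from `PID_DIR`.

```shell
#!/bin/sh
# Sketch: create the folder that holds the pid and socket files.
# PID_DIR mirrors the variable in the init script; RUN_AS_USER is an assumption.
PID_DIR="${PID_DIR:-/var/run/php-fastcgi}"
RUN_AS_USER="${RUN_AS_USER:-www-data}"

prepare_run_dir() {
    dir="$1"
    owner="$2"
    # -p makes the call safe to repeat: no error if the folder already exists
    mkdir -p "$dir" || return 1
    # Hand the folder to the FastCGI user only when running as root
    # and when that user actually exists on this machine
    if [ "$(id -u)" -eq 0 ] && id "$owner" >/dev/null 2>&1; then
        chown "$owner" "$dir" || return 1
    fi
    chmod 755 "$dir"
}
```

Running `prepare_run_dir "$PID_DIR" "$RUN_AS_USER"` as root before the first start should be enough. If `/var/run` is a tmpfs on your system, the folder disappears at reboot, so it may be worth making the same call at the top of the init script.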

